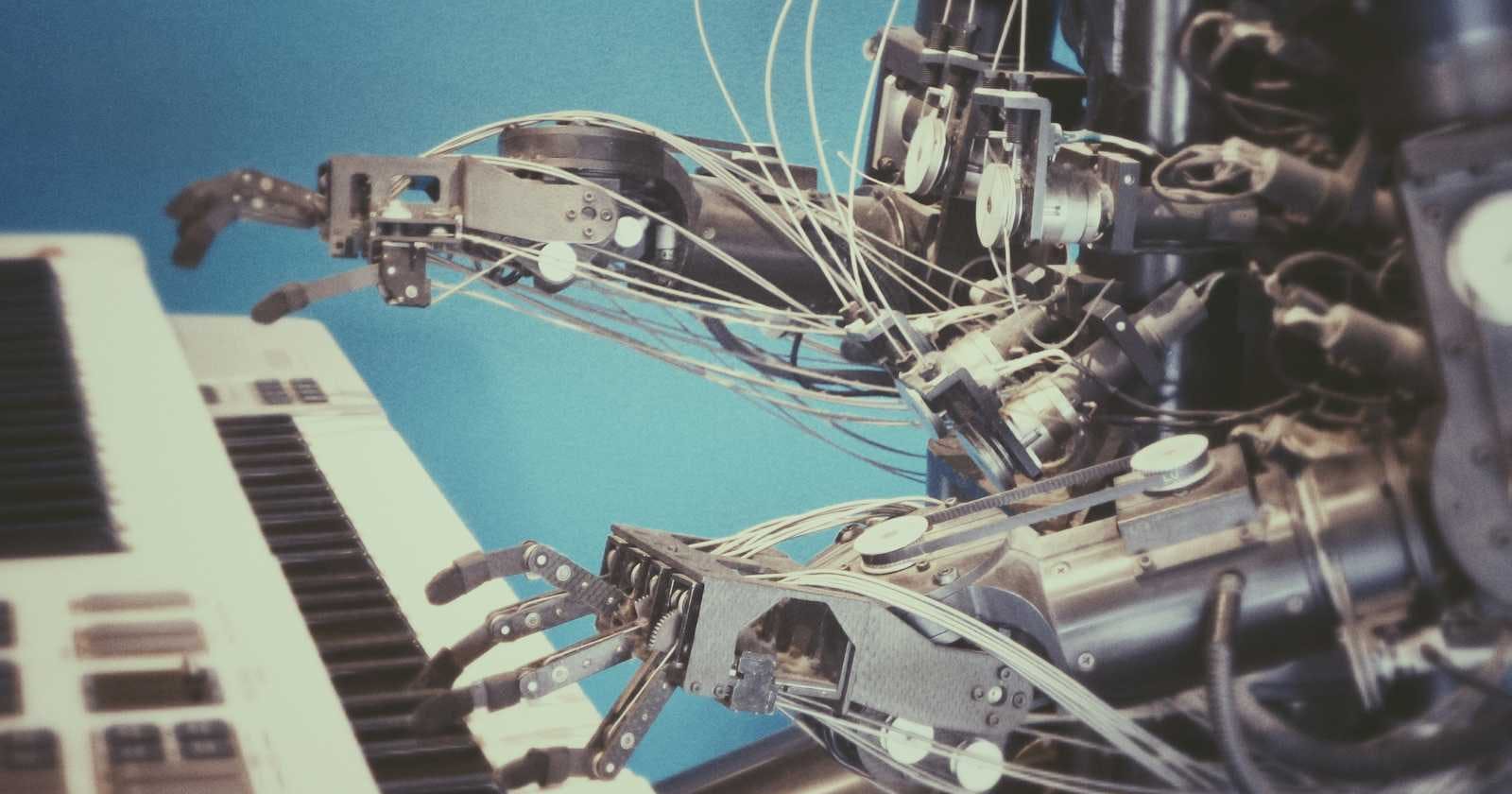

Photo by Possessed Photography on Unsplash

New tools in Azure AI for generative AI applications

Microsoft announces new tools in Azure AI to safeguard generative AI applications

Introduction

In the rapidly evolving landscape of generative AI, business leaders face the challenge of balancing innovation with risk management. Prompt injection attacks have emerged as significant threats, where malicious actors manipulate AI systems to produce harmful content or compromise confidential data. Alongwith security concerns, maintaining quality and reliability is importnant to ensure end user trust and confidence in AI systems.

New tools in Azure AI Studio

To address these challenges, Azure AI Studio introduces new tools and features within Azure AI Studio for generative AI developers:

1. Prompt Shields: Defending Against Prompt Injection Attacks

Prompt injection attacks, including direct jailbreaks and indirect manipulations, pose serious risks to AI systems. Microsoft introduces Prompt Shields to detect and block suspicious inputs in real-time, safeguarding against malicious instructions and covert attacks. Whether it's identifying direct prompts aimed at distorting outputs or detecting subtle manipulations in input data, Prompt Shields fortify the integrity of large language models (LLMs) and user interactions.

2. Groundedness Detection: Enhancing LLM Model Quality

Hallucinations, where AI models generate outputs lacking grounding in data or common sense, undermine the reliability of generative AI systems. Groundedness detection, a new feature in Azure AI, identifies text-based hallucinations, ensuring outputs align with trusted data sources. This helps improve the overall quality of LLM outputs.

3. Safety System Messages: Guiding Responsible Behavior

Azure AI empowers developers to steer AI applications towards safe and responsible outputs through effective safety system messages. By providing templates and guidance within Azure AI Studio and Azure OpenAI Service playgrounds, developers can craft messages that promote ethical usage and mitigate potential risks associated with harmful content generation.

4. Safety Evaluations and Monitoring: Assessing Risks and Safety

Automated evaluations within Azure AI Studio enable organizations to systematically assess their generative AI applications for vulnerabilities and content risks. From susceptibility to jailbreak attempts to the production of harmful content categories, these evaluations provide actionable insights to identify mitigation strategies. Additionally, risk and safety monitoring in Azure OpenAI Service allows developers to visualize and analyze user inputs and model outputs. This helps in empowering proactive measures to address emerging threats in real-time.

Empowering Responsible AI Innovation

Azure AI stands at the forefront of fostering responsible innovation in generative AI. By addressing security and quality challenges through advanced tools and features, Azure AI empowers organizations to confidently scale their AI applications while upholding ethical standards and user trust. With a commitment to continuous learning and collaboration, Azure AI ensures that every organization can harness the transformative potential of AI with confidence and integrity.